The Software-Defined Stack | EII OpenStack Networking

In this post, we are going to take a quick look at how OpenStack does networking, and along the way we will mention the important agents involved.

- Tiny Networking Capsule

- Nova-network

- Neutron Networking

- Meta-data service

- Summary

Tiny Networking Capsule

A layer-2 network is one that does not require any routing hops (e.g., traffic within the same subnet).
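To make "same subnet, no routing hop" concrete, here is a minimal Python sketch using the standard `ipaddress` module. The helper name and the addresses are illustrative assumptions, and it assumes the subnet maps onto a single broadcast domain.

```python
import ipaddress


def same_l2_segment(ip_a: str, ip_b: str, prefix: str) -> bool:
    """Return True if both hosts fall inside the given subnet.

    Hypothetical helper: hosts in the same subnet reach each other directly,
    without a router hop (assuming one subnet == one broadcast domain).
    """
    net = ipaddress.ip_network(prefix)
    return ipaddress.ip_address(ip_a) in net and ipaddress.ip_address(ip_b) in net


print(same_l2_segment("10.0.0.5", "10.0.0.42", "10.0.0.0/24"))  # True
print(same_l2_segment("10.0.0.5", "10.0.1.7", "10.0.0.0/24"))   # False: needs a router
```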

- By default, a switch does not divide the devices connected to it into multiple domains; all of them are part of the same broadcast domain.

- To split a broadcast domain, VLANs are used (traffic is tagged). Switch ports handle VLAN tagging in two ways:

- Access port: belongs to a single VLAN (i.e., a one-tag port); frames on the link are untagged and the switch assigns them to that VLAN

- Trunk port: can receive frames tagged (labeled) with different VLAN IDs
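On a trunk port, each frame carries an IEEE 802.1Q tag: a 2-byte TPID (0x8100) followed by a 2-byte field holding a 3-bit priority (PCP), a 1-bit DEI flag, and a 12-bit VLAN ID. The sketch below packs and parses that tag with Python's `struct` module. It is illustrative only, since the switch (or the Linux/OVS bridge) does this in the data path. The 12-bit ID field is also where the 4094-VLAN limit comes from: IDs 0 and 4095 are reserved.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def build_vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag that a trunk port carries in each frame."""
    # VID 0 means "priority tag only" and 4095 is reserved, leaving 1..4094.
    if not 1 <= vid <= 4094:
        raise ValueError(f"VLAN ID {vid} outside usable range 1-4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP must be 0-7 and DEI must be 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid  # 3-bit PCP, 1-bit DEI, 12-bit VID
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_id(tag: bytes) -> int:
    """Extract the 12-bit VLAN ID from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF


tag = build_vlan_tag(100)
print(tag.hex())           # 81000064
print(parse_vlan_id(tag))  # 100
```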

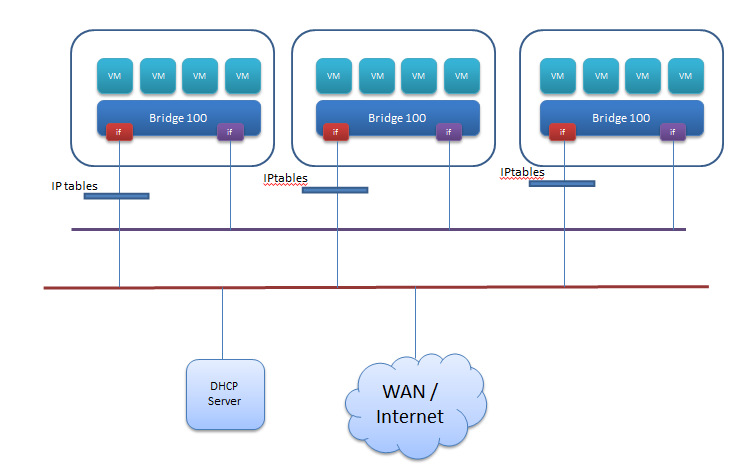

Nova-network

Nova-network is the original (now legacy) way of doing networking in OpenStack. It does the following:

- Provides basic layer-2 bridging through Linux bridges

- Manages IP addresses for tenants (allocating addresses to tenant instances)

- Configures DHCP and DNS entries in "dnsmasq"

- Configures firewall policies and NAT in iptables to secure inbound and outbound traffic
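As a rough illustration of the NAT piece, the sketch below builds the kind of iptables rules a floating (public) IP needs: DNAT for inbound traffic and SNAT for outbound traffic. The helper name and addresses are hypothetical, and nova-network places its real rules in its own chains. Treat this as the general shape of the rules, not what nova-network literally emits.

```python
def floating_ip_nat_rules(floating_ip: str, fixed_ip: str) -> list[str]:
    """Return iptables arguments mapping a floating IP to a VM's fixed IP.

    Hypothetical helper: real deployments put these rules in dedicated chains.
    """
    return [
        # Inbound: rewrite the destination from the floating IP to the VM's fixed IP.
        f"-t nat -A PREROUTING -d {floating_ip}/32 -j DNAT --to-destination {fixed_ip}",
        # Outbound: rewrite the VM's source address to the floating IP.
        f"-t nat -A POSTROUTING -s {fixed_ip}/32 -j SNAT --to-source {floating_ip}",
    ]


for rule in floating_ip_nat_rules("203.0.113.10", "10.0.0.5"):
    print("iptables", rule)
```

The example only prints the commands; running them would require root on the host.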

Its main network modes include:

- Flat network – no traffic segregation at the host level; VLANs and network functions are set at the network level

- VLAN network – each tenant gets its own VLAN (so the number of tenants is bounded by the VLAN limit), plus a dnsmasq service that performs DHCP at the host level
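The "one VLAN per tenant" idea, and the ceiling it runs into, can be sketched as a tiny allocator. `VlanPool` is a hypothetical class, not nova-network code. The usage example uses a deliberately small range so that exhaustion is visible; the default range is the 4094 usable VLAN IDs.

```python
class VlanPool:
    """Hypothetical allocator: one VLAN ID per tenant, from a fixed 12-bit range."""

    def __init__(self, first: int = 1, last: int = 4094) -> None:
        self._free = list(range(first, last + 1))
        self._by_tenant: dict[str, int] = {}

    def vlan_for(self, tenant: str) -> int:
        # A tenant keeps the same VLAN once it has been assigned one.
        if tenant in self._by_tenant:
            return self._by_tenant[tenant]
        if not self._free:
            raise RuntimeError("VLAN IDs exhausted: no segment left for a new tenant")
        vid = self._free.pop(0)
        self._by_tenant[tenant] = vid
        return vid


pool = VlanPool(first=100, last=101)  # tiny range to show exhaustion
print(pool.vlan_for("tenant-a"))      # 100
print(pool.vlan_for("tenant-b"))      # 101
print(pool.vlan_for("tenant-a"))      # 100 (already assigned)
try:
    pool.vlan_for("tenant-c")
except RuntimeError as err:
    print(err)
```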

What are the problems with Nova-network?

- Inherits the VLAN sizing limitation: the 12-bit VLAN ID field allows only 4094 usable VLANs.

- Since it is coupled with Nova (the compute service of OpenStack), it is very limited and does not allow integration with networking products from other vendors.

- Poor multi-tenancy support (especially with a flat network, where tenant traffic is not segregated).

- No support for L3 devices (e.g., virtual routers).

What is Neutron?

Neutron is essentially an API wrapper: it receives calls to perform traditional networking operations and relays them to the network plugin of choice (e.g., NSX). This decouples the API from the underlying networking technology, which is how abstraction is achieved.
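The "API wrapper plus plugin" split is essentially a strategy pattern: the front end validates a request and hands it to whichever backend is configured. The minimal Python sketch below shows only that shape. `NetworkPlugin`, `InMemoryPlugin` and `NetworkAPI` are hypothetical names, not Neutron's actual classes, and Neutron's real plugin interface is far larger.

```python
import itertools
from abc import ABC, abstractmethod


class NetworkPlugin(ABC):
    """Hypothetical backend interface that every vendor plugin would implement."""

    @abstractmethod
    def create_network(self, tenant_id: str, name: str) -> dict: ...


class InMemoryPlugin(NetworkPlugin):
    """Toy backend standing in for a real plugin such as OVS or NSX."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.networks: dict[int, dict] = {}

    def create_network(self, tenant_id: str, name: str) -> dict:
        net = {"id": next(self._ids), "tenant_id": tenant_id, "name": name}
        self.networks[net["id"]] = net
        return net


class NetworkAPI:
    """Front end: accepts API calls and relays them to the configured plugin."""

    def __init__(self, plugin: NetworkPlugin) -> None:
        self.plugin = plugin

    def create_network(self, tenant_id: str, name: str) -> dict:
        if not name:
            raise ValueError("network name must not be empty")
        # The API layer knows nothing about how the backend builds the network.
        return self.plugin.create_network(tenant_id, name)


api = NetworkAPI(InMemoryPlugin())
print(api.create_network("tenant-a", "private-net"))
```

Swapping vendors then means passing a different `NetworkPlugin` implementation, while callers of `NetworkAPI` stay unchanged.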

What are the main components of Neutron?

Neutron runs alongside the controller node, on what is referred to as the network node. The following services run on the network node:

- Neutron Server + Open vSwitch (OVS) Plugin – controls all the agents running on the network node

- N-L3-Agent – manages network namespaces and iptables rules, and performs all the NAT functions

- N-DHCP-Agent – assigns IPs to VMs by acting as a DHCP server (i.e., it controls the "dnsmasq" MAC-to-IP mappings)

- N-OVS-Agent – talks to Open vSwitch to configure the traffic flows
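Maintaining dnsmasq's MAC-to-IP mappings boils down to writing a hosts file that dnsmasq reads. dnsmasq's `--dhcp-hostsfile` option takes one `dhcp-host` entry per line. The sketch below renders such a file from a list of ports. The `Port` type, the helper name and the sample data are illustrative assumptions, and the exact fields the DHCP agent writes may differ.

```python
from dataclasses import dataclass


@dataclass
class Port:
    """Illustrative stand-in for a VM port: its MAC, fixed IP and hostname."""
    mac: str
    ip: str
    hostname: str


def render_dhcp_hosts(ports: list[Port]) -> str:
    """Render --dhcp-hostsfile content: one 'mac,ip,hostname' entry per line."""
    return "".join(f"{p.mac},{p.ip},{p.hostname}\n" for p in ports)


ports = [
    Port("fa:16:3e:00:00:01", "10.0.0.3", "vm-1"),
    Port("fa:16:3e:00:00:02", "10.0.0.4", "vm-2"),
]
print(render_dhcp_hosts(ports), end="")
```

dnsmasq re-reads a hosts file given with `--dhcp-hostsfile` when it receives SIGHUP. This lets the mappings be updated without restarting the DHCP server.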

Basically, the OVS plugin communicates with the OVS agent, which in turn configures the flows and tunnels on the underlying bridges. The OVS agents are installed on all nodes (compute and network). When traffic is forwarded, it is tunneled through the bridges according to the configuration applied by the OVS agent.
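One way to picture "configuring flows and tunnels" is as a translation between a VLAN that only has meaning on the local host and a tunnel ID that identifies the tenant network across hosts. The Python below only builds `ovs-ofctl add-flow` style flow strings for both directions. The port numbers and IDs are assumptions, and the bridge name `br-tun` follows the usual Neutron OVS naming but is also an assumption here. The real agent spreads this logic across several flow tables rather than two flat rules.

```python
def tunnel_flows(local_vlan: int, tunnel_id: int, tunnel_port: int, patch_port: int) -> list[str]:
    """Build two illustrative OpenFlow rules for a tunnel bridge (hypothetical ports).

    Outbound: frames from the integration bridge tagged with the host-local VLAN
    lose the tag, get the tenant's tunnel ID and leave through the tunnel port.
    Inbound: frames arriving with that tunnel ID get the local VLAN tag back
    and are sent to the integration bridge.
    """
    outbound = (
        f"in_port={patch_port},dl_vlan={local_vlan},"
        f"actions=strip_vlan,set_tunnel:{tunnel_id:#x},output:{tunnel_port}"
    )
    inbound = (
        f"in_port={tunnel_port},tun_id={tunnel_id:#x},"
        f"actions=mod_vlan_vid:{local_vlan},output:{patch_port}"
    )
    return [outbound, inbound]


for flow in tunnel_flows(local_vlan=1, tunnel_id=100, tunnel_port=2, patch_port=1):
    print(f'ovs-ofctl add-flow br-tun "{flow}"')
```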

Note: This is not a completely software-defined pattern, as the control plane is still coupled with our virtual network. By adding a controller we could better follow the software-defined pattern (we will address that in a different post).

Nova Meta-data service

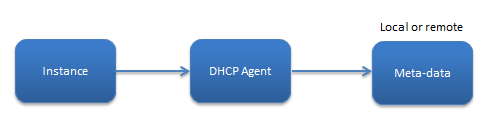

The metadata service provides the guest instance with important information it needs for correct configuration and communication, including:

- Setting a default locale or a host name

- Setting up ephemeral storage mount points

- Generating instance SSH private keys and adding SSH keys to the user's .ssh/authorized_keys file
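From inside a guest, this information is fetched over HTTP from the link-local address 169.254.169.254, typically by cloud-init. The sketch below shows the idea with Python's standard library. The `/openstack/latest/meta_data.json` path and the `hostname` and `public_keys` keys follow OpenStack's metadata format, but check them against the release in use. The fetch only works from inside an instance, so the parsing helper is exercised on a made-up sample instead.

```python
import json
import urllib.request

METADATA_URL = "http://169.254.169.254/openstack/latest/meta_data.json"


def fetch_metadata(url: str = METADATA_URL, timeout: float = 2.0) -> dict:
    """Fetch the instance's metadata document (only works from inside a guest VM)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def summarize(meta: dict) -> tuple[str, list[str]]:
    """Pick out the hostname and SSH public keys a tool like cloud-init would use."""
    hostname = meta.get("hostname", "")
    keys = list(meta.get("public_keys", {}).values())
    return hostname, keys


# Illustrative sample shaped like meta_data.json; not real instance data.
sample = {"hostname": "vm-1.novalocal", "public_keys": {"mykey": "ssh-ed25519 EXAMPLEKEY user@host"}}
print(summarize(sample))
```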

Because tenant networks can use overlapping IP ranges, the source IP of a metadata request alone is not enough to tell which instance sent it. In later versions of OpenStack (e.g., Grizzly, Havana, Icehouse, Juno), this problem was solved by dedicating two services: quantum-ns-metadata-proxy and quantum-metadata-agent (named neutron-* after Quantum was renamed Neutron in Havana). Metadata requests from an instance are redirected by the N-L3-Agent (through an iptables rule in the router namespace) to the quantum-ns-metadata-proxy running in that tenant namespace. The proxy adds two headers that identify the request's origin (X-Forwarded-For, carrying the instance IP, and X-Quantum-Router-ID, identifying the router namespace) and forwards the request over a local Unix domain socket to the quantum-metadata-agent. This solves the overlapping IP problem that previously existed.

Since the metadata agent is the only one of these services that can reach the management network, it forwards the request to the nova-metadata service, which can be installed on any node in the management network.
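The router ID header fixes overlapping IPs because the lookup key becomes the pair (router, source IP) instead of the source IP alone. The sketch below models that lookup with an in-memory table. The table, the IDs and `resolve_instance` are hypothetical; the real metadata agent resolves the instance by asking the Quantum/Neutron server which port owns that address.

```python
# Hypothetical port table: two tenants reuse 10.0.0.5 behind different routers.
PORTS = {
    ("router-a", "10.0.0.5"): "instance-111",
    ("router-b", "10.0.0.5"): "instance-222",
}


def resolve_instance(headers: dict[str, str]) -> str:
    """Map the proxy-added headers to an instance ID.

    The source IP alone is ambiguous; pairing it with the router ID is not.
    """
    key = (headers["X-Quantum-Router-ID"], headers["X-Forwarded-For"])
    try:
        return PORTS[key]
    except KeyError:
        raise LookupError(f"no instance behind {key[0]} with IP {key[1]}") from None


print(resolve_instance({"X-Forwarded-For": "10.0.0.5", "X-Quantum-Router-ID": "router-a"}))
print(resolve_instance({"X-Forwarded-For": "10.0.0.5", "X-Quantum-Router-ID": "router-b"}))
```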

Summary

In this post we reviewed nova-network (now deprecated), which was used before Neutron, and highlighted the problems that led to the development of Neutron. Neutron added many extra capabilities (e.g., tunneling and improved multi-tenancy through the use of IDs and headers). Note that we did not cover every aspect of Neutron, only those of major importance. In future posts, we will tackle deeper topics and look more closely at the other components of OpenStack.